After my previous post comparing the neoclassical synthesis in its various versions to the mind-body problem, there was an interesting Twitter exchange between Steve Randy Waldman and David Andolfatto in which Andolfatto queried whether Waldman and I were aware that there are representative-agent models in which the equilibrium is not Pareto-optimal. Andolfatto raised an interesting point, but what I found interesting about it might be different from what Andolfatto was trying to show, which, I am guessing, was that a representative-agent modeling strategy doesn’t necessarily commit the theorist to the conclusion that the world is optimal and that the solutions of the model can never be improved upon by a monetary/fiscal-policy intervention. I concede the point. It is well-known, I think, that, given the appropriate assumptions, a general-equilibrium model can have a sub-optimal solution. Given those assumptions, the corresponding representative agent will also choose a sub-optimal solution. So I think I get that, but perhaps there’s a more subtle point that I’m missing. If so, please set me straight.

But what I was trying to argue was not that representative-agent models are necessarily optimal, but that representative-agent models suffer from an inherent, and, in my view, fatal, flaw: they can’t explain any real macroeconomic phenomenon, because a macroeconomic phenomenon has to encompass something more than the decision of a single agent, even an omniscient central planner. At best, the representative agent is just a device for solving an otherwise intractable general-equilibrium model, which is how I think Lucas originally justified the assumption.

Yet the fact that a general-equilibrium model can be formulated so that its solution is the solution of an optimizing agent does not explain the economic mechanism or process that generates the solution. The mathematical solution of a model does not necessarily provide any insight into the adjustment process or mechanism by which the solution actually is, or could be, achieved in the real world. Your ability to find a solution for a mathematical problem does not mean that you understand the real-world mechanism to which the solution of your model corresponds. The correspondence between your model and the real world may be a strictly mathematical correspondence that is not in any way descriptive of how any real-world mechanism or process actually operates.

Here’s an example of what I am talking about. Consider a traffic-flow model explaining how congestion affects vehicle speed and the flow of traffic. It seems obvious that traffic congestion is caused by interactions between the different vehicles traversing a thoroughfare, just as it seems obvious that market exchange arises as the result of interactions between the different agents seeking to advance their own interests. OK, can you imagine building a useful traffic-flow model based on solving for the optimal plan of a representative vehicle?

I don’t think so. Once you frame the model in terms of a representative vehicle, you have abstracted from the phenomenon to be explained. The entire exercise would be pointless – unless, that is, you assumed that interactions between vehicles are so minimal that they can be ignored. But then why would you be interested in congestion effects? If you want to claim that your model has any relevance to the effect of congestion on traffic flow, you can’t base the claim on an assumption that there is no congestion.
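To make the point concrete, here is a minimal sketch of a standard interaction-based traffic model, the Nagel-Schreckenberg cellular automaton on a circular road. It is my choice of illustration, not anything from the original discussion, and the function name and parameter values are hypothetical. The only rule that produces congestion is the braking step, which depends on the distance to the car ahead. With a single "representative" vehicle on the road that rule never binds, so the model cannot generate congestion at all.

```python
import random


def nasch_mean_speed(n_cells, n_cars, v_max=5, p_slow=0.3, steps=200, seed=0):
    """Average speed (cells per step) on a circular road under
    Nagel-Schreckenberg rules. All parameter values are illustrative."""
    rng = random.Random(seed)
    # Cars start at distinct random cells; list order is the order around the ring.
    positions = sorted(rng.sample(range(n_cells), n_cars))
    speeds = [0] * n_cars
    total = 0
    for _ in range(steps):
        new_speeds = []
        for i in range(n_cars):
            ahead = positions[(i + 1) % n_cars]
            # Empty cells between this car and the next one (wrapping around the ring).
            gap = (ahead - positions[i] - 1) % n_cells
            v = min(speeds[i] + 1, v_max)  # 1. accelerate toward the speed limit
            v = min(v, gap)                # 2. brake to avoid the car ahead: the interaction
            if v > 0 and rng.random() < p_slow:
                v -= 1                     # 3. random slowdown
            new_speeds.append(v)
        speeds = new_speeds
        # 4. All cars move at once; no overtaking, so the ring order is preserved.
        positions = [(x + v) % n_cells for x, v in zip(positions, speeds)]
        total += sum(speeds)
    return total / (steps * n_cars)


for n in (1, 10, 25, 50):
    print(f"{n:>2} cars on 100 cells: mean speed {nasch_mean_speed(100, n):.2f}")
```

A lone car cruises near the speed limit; as more cars share the road, average speed falls, and the only reason it falls is step 2, which no single-vehicle model contains.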

Or to take another example, suppose you want to explain the phenomenon that, at sporting events, all, or almost all, the spectators sit in their seats but occasionally get up simultaneously from their seats to watch the play on the field or court. Would anyone ever think that an explanation in terms of a representative spectator could explain that phenomenon?

In just the same way, a representative-agent macroeconomic model necessarily abstracts from the interactions between actual agents. Obviously, by abstracting from the interactions, the model can’t demonstrate that there are no interactions between agents in the real world or that their interactions are too insignificant to matter. I would be shocked if anyone really believed that the interactions between agents are unimportant, much less negligible; nor have I seen an argument that they are unimportant. On the contrary, network effects, to give just one example, are an important topic in microeconomics.

It’s no answer to say that all the interactions are accounted for within the general-equilibrium model. That is just a form of question-begging. The representative agent is being assumed because without him the problem of finding a general-equilibrium solution of the model is very difficult or intractable. Taking into account interactions makes the model too complicated to work with analytically, so it is much easier — but still hard enough to allow the theorist to perform some fancy mathematical techniques — to ignore those pesky interactions. On top of that, the process by which the real world arrives at outcomes to which a general-equilibrium model supposedly bears at least some vague resemblance can’t even be described by conventional modeling techniques.

The modeling approach seems like that of a neuroscientist saying that, because he could simulate the functions, electrical impulses, chemical reactions, and neural connections in the brain – which he can’t do and isn’t even close to doing, even though a neuroscientist’s understanding of the brain far surpasses any economist’s understanding of the economy – he can explain consciousness. Simulating the operation of a brain would not explain consciousness, because the computer on which the neuroscientist performed the simulation would not become conscious in the course of the simulation.

Many neuroscientists and other materialists like to claim that consciousness is not real, that it’s just an epiphenomenon. But we all have the subjective experience of consciousness, so whatever it is that someone wants to call it, consciousness — indeed the entire world of mental phenomena denoted by that term — remains an unexplained phenomenon, a phenomenon that can only be dismissed as unreal on the basis of a metaphysical dogma that denies the existence of anything that can’t be explained as the result of material and physical causes.

I call that metaphysical belief a dogma not because it’s false — I have no way of proving that it’s false — but because materialism is just as much a metaphysical belief as deism or monotheism. It graduates from belief to dogma when people assert not only that the belief is true but that there’s something wrong with you if you are unwilling to believe it as well. The most that I would say against the belief in materialism is that I can’t understand how it could possibly be true. But I admit that there are a lot of things that I just don’t understand, and I will even admit to believing in some of those things.

New Classical macroeconomists, like, say, Robert Lucas and, perhaps, Thomas Sargent, like to claim that unless a macroeconomic model is microfounded — by which they mean derived from an explicit intertemporal optimization exercise typically involving a representative agent or possibly a small number of different representative agents — it’s not an economic model, because the model, being vulnerable to the Lucas critique, is theoretically superficial and vacuous. But only models of intertemporal equilibrium — a set of one or more mutually consistent optimal plans — are immune to the Lucas critique, so insisting on immunity to the Lucas critique as a prerequisite for a macroeconomic model is a guarantee of failure if your aim is to explain anything other than an intertemporal equilibrium.

Unless, that is, you believe that the real world is in fact the realization of a general-equilibrium model, which is what real-business-cycle theorists, like Edward Prescott, at least claim to believe. Like materialist believers that all mental states are epiphenomenal, and that consciousness is an (unexplained) illusion, real-business-cycle theorists purport to deny that there is such a thing as a disequilibrium phenomenon, the so-called business cycle, in their view, being nothing but a manifestation of the intertemporal-equilibrium adjustment of an economy to random (unexplained) productivity shocks. According to real-business-cycle theorists, such characteristic phenomena of business cycles as surprise, regret, disappointed expectations, abandoned and failed plans, and the inability to find work at wages comparable to wages that other similar workers are being paid are not real phenomena; they are (unexplained) illusions and misnomers. The real-business-cycle theorists don’t just fail to construct macroeconomic models; they deny the very existence of macroeconomics, just as strict materialists deny the existence of consciousness.

What is so preposterous about the New-Classical/real-business-cycle methodological position is not the belief that the business cycle can somehow be modeled as a purely equilibrium phenomenon, implausible as that idea seems, but the insistence that only micro-founded business-cycle models are methodologically acceptable. It is one thing to believe that ultimately macroeconomics and business-cycle theory will be reduced to the analysis of individual agents and their interactions. But current micro-founded models can’t provide explanations for what many of us think are basic features of macroeconomic and business-cycle phenomena. If non-micro-founded models can provide explanations for those phenomena, even if those explanations are not fully satisfactory, what basis is there for rejecting them just because of a methodological precept that disqualifies all non-micro-founded models?

According to Kevin Hoover, the basis for insisting that only micro-founded macroeconomic models are acceptable, even if the microfoundation consists in a single representative agent optimizing for an entire economy, is eschatological. In other words, because of a belief that economics will eventually develop analytical or computational techniques sufficiently advanced to model an entire economy in terms of individual interacting agents, an analysis based on a single representative agent, as the first step on this theoretical odyssey, is somehow methodologically privileged over alternative models that do not share that destiny. Hoover properly rejects the presumptuous notion that an avowed, but unrealized, theoretical destiny can confer a privileged methodological status on an explanatory strategy. The reductionist microfoundationalism of New-Classical macroeconomics and real-business-cycle theory, with which New Keynesian economists have formed an alliance of convenience, is truly a faith-based macroeconomics.

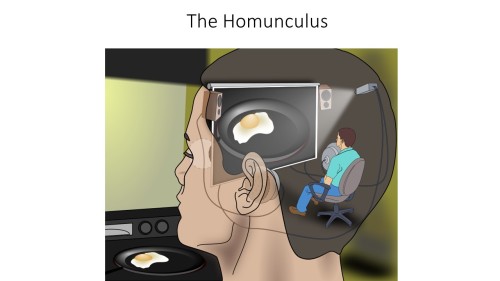

The remarkable similarity between the reductionist microfoundational methodology of New-Classical macroeconomics and the reductionist materialist approach to the concept of mind suggests to me that there is also a close analogy between the representative agent and what philosophers of mind call a homunculus. The Cartesian materialist theory of mind maintains that, at some place or places inside the brain, there resides information corresponding to our conscious experience. The question then arises: how does our conscious experience access the latent information inside the brain? And the answer is that there is a homunculus (or little man) that processes the information for us so that we can perceive it through him. For example, the homunculus (see the attached picture of the little guy) views the image cast by light on the retina as if he were watching a movie projected onto a screen.

But there is an obvious fallacy, because the follow-up question is: how does our little friend see anything? Well, the answer must be that there’s another, smaller, homunculus inside his brain. You can probably already tell that this argument is going to take us on an infinite regress. So what purports to be an explanation turns out to be just a form of question-begging. Sound familiar? The only difference between the representative agent and the homunculus is that the representative agent begs the question immediately without having to go on an infinite regress.

PS I have been sidetracked by other responsibilities, so I have not been blogging much, if at all, for the last few weeks. I hope to post more frequently, but I am afraid that my posting and replies to comments are likely to remain infrequent for the next couple of months.

David,

You might be interested in this construction of a representative agent that has entirely different properties from those of the micro agents:

http://informationtransfereconomics.blogspot.com/2015/09/the-emergent-representative-agent-1.html

In particular, the representative agent has well defined preferences and consumption smoothing while micro agents are random.


Interesting. I have also written on this topic on the basis of your previous post:


David, I think you should check out Jason Smith’s stuff. The basic idea is that you do not need a detailed model of human behavior to derive economic results. For example, you can take individual agents who behave entirely randomly (subject to budget constraints) and derive a supply-demand model that reaches equilibrium (with very high probability), much the way a room full of gas molecules bouncing randomly about is likely to wind up with uniform temperature, pressure, etc., with very high probability (because entropy is exceedingly unlikely to ever decrease significantly for any significant time period). No need for any stories of utility maximization, preferences, etc.

For example, here:

http://informationtransfereconomics.blogspot.com/2014/06/the-information-transfer-model.html

This direction strikes me as a significant advance over relying on representative agent behavior to find equilibria.

-Ken


While I am sympathetic to your criticisms of modern macro modelling, and am myself a proponent of broadening the acceptable methodologies in economics, I also think that this post seriously underestimates the power of “representative agent” models.

New monetarist models for example use symmetry and randomization to allow a “representative agent” framework to capture many aspects of the behavior of different types of agents. For example, every agent can produce/consume a different type of good. By constructing the problem symmetrically, there is meaningful trade, a need for a means of exchange, etc. — even though representative agent methodology is being used to solve the problem. Thus, I am far from convinced that important aspects of your “traffic flow” problem cannot be captured in a representative agent framework.

On the other hand, the focus on symmetry may (or may not) impose limits on the methodology that keep it from answering certain questions. In short, it’s important not to underestimate the creativity of the people who use these tools.

For an overview of new monetarism see: https://research.stlouisfed.org/publications/review/10/07/Williamson.pdf and the accompanying Handbook of Monetary Economics article.


Splendid, David. You know how to state with precision my more or less confused intuitions. That reminds me of Plato’s (obviously false) theory of prior innate knowledge: false, but a beautiful theory which I sometimes adhere to, because in some contexts it is an exact explanation of the process of teaching and learning.


Did you know that Jennifer La’O has a model called “A Traffic Jam Theory of Recessions” ? You can access it at her website at Columbia University


Jason, Thanks for the link and your follow up post as well. Your argument reminds me of the paper “Irrational Behavior and Economic Theory,” published in the JPE in 1962 and reprinted in Becker’s The Economic Approach to Human Behavior, in which he showed that budget constraints were sufficient to imply negatively sloped demand curves and other standard microeconomic results. He credited Alchian’s 1950 JPE paper “Uncertainty, Evolution, and Economic Theory” for anticipating his argument. As I recall, Israel Kirzner wrote a comment published in the JPE criticizing Becker for not sticking with utility maximization. It might help you to use Becker’s argument as a way of improving your communication with economists who, if they are like me, have trouble comprehending arguments that aren’t made in the language we’re accustomed to speaking. One other point to consider is that in an intertemporal context with incomplete markets there is no such thing as a true budget constraint, because the prices in the budget constraint are largely expected prices, not actual prices; so if prices turn out to differ from those that were expected, budget constraints may be violated (households or firms go bankrupt).
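Becker’s point that budget constraints alone imply negatively sloped demand can be illustrated with a small simulation. This is a minimal sketch, assuming, as a simplification, two goods, a fixed income, and hypothetical agents who always exhaust their income but split it between the goods uniformly at random. The function name and parameter values are illustrative, not taken from Becker’s paper.

```python
import random


def mean_demand(p1, income=10.0, n_agents=50_000, seed=0):
    """Average quantity of good 1 bought by hypothetical agents who spend all
    their income on two goods but split it between them at random."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_agents):
        share = rng.random()           # random fraction of income spent on good 1
        total += share * income / p1   # quantity of good 1 that fraction buys
    return total / n_agents


# No agent maximizes anything, yet average demand falls as the price rises
# (in expectation it equals income / (2 * p1)).
for p1 in (1.0, 2.0, 4.0):
    print(f"p1 = {p1}: average quantity of good 1 = {mean_demand(p1):.2f}")
```

The downward slope comes entirely from the budget constraint: a higher price shrinks the set of feasible quantities of good 1, so random choices within that set are, on average, smaller.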

C.H., Thanks. I saw the post you linked to but was only able to read it quickly without fully comprehending it. Still, I think I agreed with a lot of it, and it actually did help me make the argument in this post.

Ken, Thanks for your summary of what Jason is trying to do which helped me see the similarity between what he is trying to do and Becker’s argument in his 1962 paper.

csissoko, I am going to try to read the Williamson and Wright paper, which I had filed away previously but have not studied. I am aware that there are models with heterogeneous agents, and these models may indeed be able to take into account interactions between agents in a way that the simpler models do not. So my criticisms don’t necessarily apply to those models, but I don’t think those models have yet gotten very far, because they are hard to work with analytically.

Miguel, Many thanks for your kind words.


David, another interesting post! Like Kenneth Duda, I too am a fan of both Jason’s blog and your blog. You write above:

“Jason, Thanks for the link and your follow up post as well.”

I’m not certain what you mean by “follow up post” but if by chance it’s not this (his post today regarding yours here), … well … you have it now (note that he has a question for you in there in footnote [1]). Also, if you’d like a more formal and centralized write up, he has one in the form of a paper (in a journal submission friendly format), here:

http://arxiv.org/abs/1510.02435

As you can probably guess, I’m no genius, but I made it through the 1st 25% and understand it. The math so far hasn’t been too bad.

Also, I have not yet met up with my pub-quiz / fMRI scientist acquaintance, but perhaps tomorrow night. I want to ask him about his opinion of free will. If not, perhaps I’ll just email him. More to come on that!


“The modeling approach seems like that of a neuroscientist saying that, because he could simulate the functions, electrical impulses, chemical reactions, and neural connections in the brain – which he can’t do and isn’t even close to doing, even though a neuroscientist’s understanding of the brain far surpasses any economist’s understanding of the economy – he can explain consciousness. Simulating the operation of a brain would not explain consciousness, because the computer on which the neuroscientist performed the simulation would not become conscious in the course of the simulation.”

How do you know the computer would not become conscious? Correct me if I’m mistaken, but other than by tacitly assuming it, I can’t see how you can know that.

More generally, and with due respect, I’d suggest you reconsider your opinions on materialism and provide at least a sketch of your own non-materialist views.


David,

I agree with your conclusion about the neoclassical economics, but I do not necessarily agree with philosophical parts of your reasoning. As far as I learned from the philosophy books, the question of ‘existence’ of consciousness/qualia ultimately devolves to the conflict between nominalism and realism. Do ideal objects, like numbers, ‘exist’? There is probably no satisfactory answer to that beyond Wittgenstein’s “Whereof one cannot speak, thereof one must be silent”.

The problem is not that neoclassical economists try to aggregate micro-level entities into macro-level entities; the problem is that they do that in a shoddy manner. Imagine if a 19th-century thermodynamics physicist decided that, because gas is an interaction of billions of molecules and you can’t easily solve the problem of their interaction, the answer is to model gas as a ‘representative molecule’. He’d be laughed out of the profession by his colleagues. Instead we got appropriate models like classical thermodynamics for studying the emergent properties of molecular interaction and statistical physics which delves more deeply into how those emergent properties emerge. And, y’know, internal combustion engines.

The point is, I guess, that little depends on whether some metaphysical entity exists at the highest level of aggregation. Does ‘economy’ ‘exist’? Does ‘consciousness’ ‘exist’? Does ‘reality’ ‘exist’? Neurochemistry bypasses problems like the existence of the ghost in the machine by studying the behavior of things that can be studied, like dopamine receptors. Likewise, there are things that can be studied on the micro- and macro-levels of economics, but they must be treated in a reasonable manner.


All the criticisms of David’s post are more or less reasonable, but are you taking into account that models based on a representative rational agent cannot explain facts such as the 2007-2008 crisis?


David,

Thank you for the reference — I am indeed using the same argument as section 2 of Becker’s “Irrational Behavior and Economic Theory”. I add only 1) an argument that if the number of different goods d being optimized (the number of axes) is large, there is no need to restrict to the budget constraint (as ‘maximization’ happens automatically as d → ∞), 2) an interpretation of the equilibrium in terms of entropy and 3) the possibility of falling away from the ‘maximum’.
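Point 1) can be illustrated numerically. This is a minimal sketch, assuming prices and income are normalized so that the budget set is {x ≥ 0, x_1 + … + x_d ≤ 1} (rescaling each axis by its price preserves uniform sampling), and assuming an agent’s choice is a point drawn uniformly from that set. The function names are hypothetical. Under these assumptions the expected share of income spent is d/(d + 1), which approaches 1 as d grows, so random choices end up on or very near the budget constraint without anyone maximizing.

```python
import random


def mean_budget_share(d, n_samples=5_000, seed=0):
    """Monte Carlo estimate of the average fraction of income spent when a
    bundle is drawn uniformly from the budget set {x >= 0, x_1 + ... + x_d <= 1}."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        # d + 1 normalized exponentials are uniform on the d-simplex; dropping the
        # last ("unspent income") coordinate gives a uniform point in the budget set.
        e = [rng.expovariate(1.0) for _ in range(d + 1)]
        total += sum(e[:d]) / sum(e)
    return total / n_samples


def exact_budget_share(d):
    """Closed form: the unspent share is Beta(1, d), with mean 1 / (d + 1)."""
    return d / (d + 1)


for d in (1, 2, 10, 100):
    print(f"d = {d:>3}: simulated {mean_budget_share(d):.3f}, exact {exact_budget_share(d):.3f}")
```

The concentration is a purely geometric fact: in high dimensions almost all of the volume of the budget set lies close to its outer face.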

Regarding the intertemporal budget constraint, I completely agree you are right — it is subject to expectations and thwarted plans. However, I think there are (information theory) bounds that can help understand the long term trend. Those expectations and thwarted plans lead to information loss and e.g. asset prices or output that fall below that ‘information equilibrium’ trend … i.e. a recession. These information equilibrium relationships make sense (IMHO) of the nonsensical accounting identity approaches you talked about earlier this year.

Both the Becker argument and ‘information equilibrium’ are part of a single framework — Tom Brown kindly linked to the paper about the latter I put up on arXiv.org above.


David,

Does your argument apply to IS/LM?

Also, what do you believe is the alternative? Do you believe there are schools of thought, economists or types of modeling that achieve your desired results? In other words, putting aside philosophy, in what precise direction would you like to see macro modeling go?


David, thanks for a very interesting post! You write:

“It graduates from belief to dogma when people assert not only that the belief is true but that there’s something wrong with you if you are unwilling to believe it as well.”

I promise I won’t do that. :^D

“Simulating the operation of a brain would not explain consciousness, because the computer on which the neuroscientist performed the simulation would not become conscious in the course of the simulation.”

How do you know that? We’ve successfully built artificial replacement neurons (e.g. in the form of cochlear implants) to replace missing or damaged biological neurons. These artificial neurons are apparently successful in replicating the important functions of the neurons they replace, and that’s all that’s required for the patient who can again experience sound. In principle, what’s to stop us from replacing each and every nerve in somebody’s brain (one at a time) with artificial nerves designed to replicate the important consciousness relevant information processing and storage functions of the nerve cells they replace? How could you claim the resulting artificial nerve network wouldn’t be conscious? How would the result fundamentally differ from the simulation you speak of? Perhaps I’m wrong and it would be fundamentally different, but at least there’s a conceivable path to someday performing a test of this: namely constructing such a simulation and testing it for consciousness.

“Many neuroscientists and other materialists like to claim that consciousness is not real, that it’s just an epiphenomenon.”

I think you’ll find plenty of materialists (including me) that will agree with you that consciousness is real. I don’t see that at odds with also saying that it’s an emergent property of a network of neurons.

Let me quote physicist Sean Carroll on this:

“…in the face of admittedly incomplete understanding, we evaluate the relative merits of competing hypotheses. In this case, one hypothesis says that the operation of the brain is affected in a rather ill-defined way by influences that are not described by the known laws of physics, and that these effects will ultimately help us make sense of human consciousness; the other says that brains are complicated, so it’s no surprise that we don’t understand everything, but that an ultimate explanation will fit comfortably within the framework of known fundamental physics. This is not really a close call; by conventional scientific measures, the idea that known physics will be able to account for the brain is enormously far in the lead. To persuade anyone otherwise, you would have to point to something the brain does that is in apparent conflict with the Standard Model or general relativity. (Bending spoons across large distances would qualify.) Until then, the fact that something is complicated isn’t evidence that the particular collection of atoms we call the brain obeys different rules than other collections of atoms.”

From his post called “Seriously, The Laws Underlying The Physics of Everyday Life Really Are Completely Understood.”

From another of his posts entitled “Physics and the Immortality of the Soul” I’ve taken the liberty of modifying an excerpt to make it more relevant to the mind body problem:

———————— start modified quote ———————

Within quantum field theory, there can’t be a new collection of “disembodied mind (DM) particles” and “DM forces” that interact with our regular atoms, because we would have detected them in existing experiments. Ockham’s razor is not on your side here, since you have to posit a completely new realm of reality obeying very different rules than the ones we know.

But let’s say you do that. How is the DM energy supposed to interact with us? Here is the equation that tells us how electrons behave in the everyday world:

$$ i\gamma^\mu \partial_\mu \psi_e - m \psi_e = ie\gamma^\mu A_\mu \psi_e - \gamma^\mu \omega_\mu \psi_e . $$

Don’t worry about the details; it’s the fact that the equation exists that matters, not its particular form. It’s the Dirac equation — the two terms on the left are roughly the velocity of the electron and its inertia — coupled to electromagnetism and gravity, the two terms on the right.

As far as every experiment ever done is concerned, this equation is the correct description of how electrons behave at everyday energies. It’s not a complete description; we haven’t included the weak nuclear force, or couplings to hypothetical particles like the Higgs boson. But that’s okay, since those are only important at high energies and/or short distances, very far from the regime of relevance to the human brain.

If you believe in a DM that interacts with our bodies, you need to believe that this equation is not right, even at everyday energies. There needs to be a new term (at minimum) on the right, representing how the DM interacts with electrons. (If that term doesn’t exist, electrons will just go on their way as if there weren’t any DM at all, and then what’s the point?) So any respectable scientist who took this idea seriously would be asking — what form does that interaction take? Is it local in spacetime? Does the DM respect gauge invariance and Lorentz invariance? Does the DM have a Hamiltonian? Do the interactions preserve unitarity and conservation of information?

———————— end modified quote ———————-

Regarding the homunculus idea, what if instead animal or human consciousness can be defined as a particular pattern of information processing, such that any information processor (computer, brain, etc) partaking in this class of information processing can be said to be conscious in the same manner biological animals are? Again, I claim this is an idea that can (in principle anyway) be tested: we can build non-brain information processors which objectively meet the information processing requirements of our defined class of animal brain processors and then test them to see if they are indeed conscious using the same tests for consciousness we apply to biological creatures.

Finally, I think your analogy between materialist cognitive scientists and micro-foundations modeling macro-economists would be more accurate if those materialists were using only one or a small number of representative brain cells in their models and were also claiming they’d successfully modeled consciousness with those models. Regardless of their method, I’m not aware of any materialists who claim they have a working consciousness model.


Source for my modified quote above: “Physics and the Immortality of the Soul”


David, I think a lot of people feel that the methodological approach in modern macro is just not quite right.

And I think you have nailed what it is. This was one of the best critiques I have read.


Tom, As usual, because our vocabularies are so different, I’m not sure that I understand what Jason is saying, but I think that I would agree with him about an emergent representative agent. The problem with an emergent representative agent is that you need to explain emergence before you know what the emergent representative agent actually looks like, so I don’t see that arguing in terms of an emergent representative agent actually accomplishes anything. It still seems to me like a form of question begging.

Harry, You’re right, I don’t know that the computer would not become conscious. But no neuroscientist knows that it would, and when neuroscientists or materialists claim that consciousness is entirely explicable in terms of physical/material causes, they are tacitly assuming that the computer would be conscious. I think John Searle and Colin McGinn and before them Karl Popper have explained a non-materialist theory of consciousness very ably. No need for a rank amateur like me to try to reproduce what they have already done.

Roman, I think that I agree for the most part with what you say, and I consider myself to be a nominalist. But there is a question whether some macro or higher level phenomenon that is made up of many parts or components can be reduced to the components. That is what is meant by emergent phenomena: something fundamentally different emerging out of the smaller component objects. If science can explain how the larger scale phenomenon can be reduced to the smaller scale phenomenon that’s great, but we have no reason to treat the larger scale phenomenon as if it were nothing but the aggregation of the smaller ones until we have a scientific explanation that shows the exact relationship between the larger and the smaller. Scientific reductionism says it’s a great achievement when we explain how the large scale phenomenon can be reduced to the smaller scale phenomenon. Philosophical reductionism says don’t bother talking about the larger scale phenomenon, because we already know that it has to be (regardless of whether we know how) reducible to the smaller phenomena.

Miguel, Just so.

Jason, Thanks, I’m glad that I have at least started to get a handle on what you have been talking about, and that there is a lot of common ground in our approaches.

Ilya, I don’t know what you mean by whether my argument applies to IS/LM. I am not a particular fan of IS/LM, but it can be useful for some purposes. I’m afraid that I don’t have any particular paradigm that I find superior to all others. I like Earl Thompson’s approach a lot. I wrote four posts about three years ago summarizing his paper “A Reformulation of Macroeconomic Theory.” I still have some more comments to make about it, but haven’t gotten around to doing so. It has been almost 40 years since he wrote the last of his unpublished drafts of the paper, so it would be worth spending some more time on it. But his model is too highly aggregated for my tastes, so I am not sure how much I trust its conclusions or policy implications.

Tom, As I said in my reply to Harry’s comment above, I don’t know it (but my intuition is pretty strong that the computer would not be conscious).

Materialists who say that consciousness is real but that it’s an emergent property of a neural network are either saying that consciousness is an epiphenomenon in the sense that nothing would change if we weren’t conscious except that we wouldn’t have the sensation of experiencing a mental life, or they are admitting that there is an emergent phenomenon that they can’t explain as being nothing more than the physical neural network. The whole point of the mind-body problem is that causation goes in both directions. Materialists seem to think that every time they show that the mind is affected by the brain they have proved their case. That’s just silly. They haven’t shown that the mind never affects the brain. Have you shed a tear when reading a novel? What is the material cause of that teardrop? Do you think a computer would ever experience a similar sensation after having Anna Karenina scanned into its memory?

Nanikore, Many thanks for your comment. Glad you liked it.

“Do you think a computer would ever experience a similar sensation after having Anna Karenina scanned into its memory?”

I suppose this sounds crazy to you, but given that it was designed to process information in the same way our brains do, I don’t see why not. I think Carroll (if he’s correct about everyday physics being “complete”) captures why: the non-materialist has to provide a falsifiable explanation for how this non-material force interacts with the material world (causing those tears to flow). The materialist doesn’t: he builds tear ducts into his model and allows them to be controlled via the central processor. And we’ve never seen any evidence of this mysterious non-material force. We don’t see any “weird stuff” happening (nobody has taken James Randi’s $1 million), so what we’re left with is material objects with material inputs and material outputs. A priori, complexity seems the best explanation for why the puzzle of consciousness has not yet been solved, not new forces that bridge an ill-defined non-material world with the material one.

“John Searle and Colin McGinn and before them Karl Popper have explained a non-materialist theory of consciousness very ably”

I will check that out. Maybe they will change my mind. Thanks David!

Re: tears: The materialist is still responsible, however, for demonstrating a completed model of consciousness which does not fail any test for consciousness, perhaps including those tears.

If I were in charge of a $10 billion project to explain consciousness, I’m left wondering what possible argument the non-materialists could use to justify spending on their hypothesis. The materialists have an easy case to make: we need more computing power, better fMRI machines, etc. (i.e., they’re fighting the complexity and limited observability of a complex system, but otherwise their proposal falls comfortably within the framework of known, everyday physics).

But then I haven’t read your references yet, so perhaps I should start there!

The materialists have one other advantage in competing for my research dollars: they can propose an incremental approach. First we build an ant brain that we can demonstrate is utterly indistinguishable from an ant (this has already been done, I believe… but if it hasn’t, a roundworm might be better still: only 300 neurons total there), then a lizard, then a mouse, then a dog, then a crow (crows have demonstrated they can count and solve problems rationally), then a dolphin (dolphins can recognize themselves in a mirror), then a chimp, etc.

How would the non-materialists respond to that part of the materialists’ sales pitch? What incremental approach can they propose?

After a short search, I found this example (it looks like there’s an effort being made for bees too):

PhDStudentUK.pdf

So I don’t think that qualifies as a complete, neuron-for-neuron accurate ant (insect) brain model yet, but it’s not entirely clear. Looks like $1 trillion might be a more reasonable budget for my project… and maybe 100 years? Lol.

Some of the people involved certainly had ambitious goals in 2012:

“Build a simulation of an insect brain to explain how bees perceive the world and learn about their environment. It’s more than just a bee flight simulator; you will design its auto-pilot brain capable of learning to forage for food, learning to use landmarks for navigation, and explain phenomena of complex cognition in bees. This is a rare opportunity to tightly couple software engineering skills with real biological experiments in the same lab.”

“In this ambitious joint Biology and Computer Science PhD you will program a simulation of a bee in an environment to explain how such behaviour could be mediated by a miniature brain in situated and embodied tasks. The simulated bee will have a visual system and a motor system, and will fly in a 3D environment. The simulation must be reconfigurable to various levels of abstraction allowing modelling of functions such as concept learning, reinforcement learning, landmark learning etc, as well as lower level visually based flight functions, and purely reactive obstacle avoidance functions. It will allow testing of various controllers, e.g. spiking neuronal networks, continuous time recurrent neural networks, computer vision algorithms, cognitive architectures, etc.”

Sorry for all the mess above, David. I’ll give it a rest.

Getting back to the subject of econ: Jason’s recent look at the intertemporal.

And this one, hot off the presses: directly addressing your “question begging” point above. Enjoy!

Tom, Your link doesn’t seem to be working

Economics in self-paralysis

Comment on ‘Representative Agents, Homunculi and Faith-Based Macroeconomics’

Since 1972 every smart economist can know from the Sonnenschein/Mantel/Debreu proof that General Equilibrium Theory is dead. What exactly follows from this?

What follows is nothing less than this: Orthodoxy is dead. Why? Because it has been defined thus: “It is a touchstone of accepted economics that all explanations must run in terms of the actions and reactions of individuals. Our behavior in judging economic research, in peer review of papers and research, and in promotions, includes the criterion that in principle the behavior we explain and the policies we propose are explicable in terms of individuals, not of other social categories.” (Arrow, 1994, p. 1)

There is not the slightest ambiguity: according to this self-definition of Orthodoxy, the representative-agent approach is a priori out, inadmissible, a self-contradiction, a methodological absurdity.

The representative agent variant of the microfoundation program is a shot in the foot. What, in turn, follows from this? “There is another alternative: to formulate a completely new research program and conceptual approach. As we have seen, this is often spoken of, but there is still no indication of what it might mean.” (Ingrao and Israel, 1990, p. 362)

So, what could it possibly mean? Hmmm? Which part of ‘a completely new research program’ is unclear?

It simply follows that it is a waste of time to either defend or to attack the microfoundation program because we now know for sure that it was already dead when Jevons/Walras/Menger started it. As a matter of principle, NO way leads from the understanding of individual behavior to the understanding of how the economic system works.*

It is not worth the time to criticize again and again different aspects of Orthodoxy because the program is as meaty as Geo-centrism in physics. Yet, what is even worse than ‘finicky scholasticism — getting tied up in little assertions or minor criticism (Popper)’ is to resort to the conclusion that Orthodoxy does not meet well-defined scientific criteria, yet that it is, given the complexity/uncertainty of the subject matter, the best that could be achieved. This, unfortunately, seems to be the resigned deadlock between Orthodoxy and Heterodoxy: “Yet most economists neither seek alternative theories nor believe that they can be found.” (Hausman, 1992, p. 248)

Economics is caught in a cul-de-sac and the representative economist has no idea how to get out: “… we may say that … the omnipresence of a certain point of view is not a sign of excellence or an indication that the truth or part of the truth has at last been found. It is, rather, the indication of a failure of reason to find suitable alternatives which might be used to transcend an accidental intermediate stage of our knowledge.” (Feyerabend, 2004, p. 72)

This self-paralysis explains why economics is still a proto-science. Strictly speaking, after the SMD proof there remained only one worthwhile talking point: what does the new paradigm look like? It should be clear by now that the representative agent has been a senseless detour. Nothing less than the full replacement of the axiomatic foundations of standard economics will do.

Egmont Kakarot-Handtke

References

Arrow, K. J. (1994). Methodological Individualism and Social Knowledge. American Economic Review, Papers and Proceedings, 84(2): 1–9. URL http://www.jstor.org/stable/2117792.

Feyerabend, P. K. (2004). Problems of Empiricism. Cambridge: Cambridge University Press.

Hausman, D. M. (1992). The Inexact and Separate Science of Economics. Cambridge: Cambridge University Press.

Ingrao, B., and Israel, G. (1990). The Invisible Hand. Economic Equilibrium in the History of Science. Cambridge, MA, London: MIT Press.

* For details see ‘The Science-of-Man fallacy’

http://axecorg.blogspot.de/2015/10/the-science-of-man-fallacy.html

My last link above (the broken one) should have been this:

http://informationtransfereconomics.blogspot.com/2015/10/emergent-representative-agents-means-to.html

Also, you might be interested in an article I found through Brad DeLong’s blog having to do with brain science … by someone who calls herself the “Math Babe”… Lol. She thinks (like you, David) that the brain modelers are overstating their progress so far. Here it is:

http://mathbabe.org/2015/10/20/guest-post-dirty-rant-about-the-human-brain-project/

I don’t know if this is a trend or not, but that’s the 3rd blogger calling herself a “babe” that I’ve become aware of in the past three months… the other two are the “food babe” and the “science babe.” Here’s the science babe dismantling the food babe.

David,

I think you are making a mistake in your discussion of representative agents. This quote illustrates the problem:

“representative-agent models suffer from an inherent, and, in my view, fatal, flaw: they can’t explain any real macroeconomic phenomenon, because a macroeconomic phenomenon has to encompass something more than the decision of a single agent”

But representative agent does *not* mean that there is a single agent! RA simply means *identical* agents. In fact, we usually assume (say) a unit continuum of households.

As for your examples, one could definitely use a representative-agent model to think about the behavior of spectators at a baseball game, or cars in traffic. In fact, this seems a reasonable simplification in these cases. For instance, you could solve for the behavior of a representative spectator/car as a function of the average behavior of the others (fraction of other spectators who are standing; average distance between cars and average speed of other cars).
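The spectator case Jonathan describes can be sketched as a fixed-point (mean-field) calculation. This is a minimal sketch, not taken from any of the commenters, assuming a hypothetical logit choice rule: one representative spectator stands with a probability that rises with the share of *other* spectators already standing, and an equilibrium is a share that reproduces itself. The function names and the parameters `benefit`, `cost`, and `noise`, along with their values, are illustrative assumptions.

```python
import math


def best_response(share_standing, benefit=2.0, cost=1.0, noise=0.2):
    """Hypothetical logit rule: probability that a representative spectator
    stands, given the share of other spectators who are standing."""
    net_gain = benefit * share_standing - cost  # gain from standing rises as others stand
    return 1.0 / (1.0 + math.exp(-net_gain / noise))


def solve_mean_field(initial_share, tol=1e-12, max_iter=10_000):
    """Iterate share -> best_response(share) until the share stops changing,
    i.e. until the average behavior is consistent with the individual choice."""
    share = initial_share
    for _ in range(max_iter):
        new_share = best_response(share)
        if abs(new_share - share) < tol:
            return new_share
        share = new_share
    raise RuntimeError("fixed-point iteration did not converge")


# Different starting guesses for the crowd's behavior reach different equilibria.
low = solve_mean_field(0.2)
high = solve_mean_field(0.8)
print(f"low-standing equilibrium:  {low:.4f}")
print(f"high-standing equilibrium: {high:.4f}")
```

With these made-up parameters, starting from a low share of standing spectators converges to an equilibrium where almost nobody stands, while starting from a high share converges to one where almost everybody stands. The average behavior of the others therefore has to be solved for jointly with the individual's choice rule.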

Egmont, We agree that the representative agent model is a bad research strategy. I don’t think that methodological individualism or the basic neoclassical paradigm are necessarily tied to the representative agent. The world is more complicated than that.

Tom, Thanks, I had seen Jason’s post already, but not mathbabe’s post (actually wasn’t it someone else guest posting on her blog?)

Jonathan, Well, I think that there are different realizations of the representative agent. In Lucas’s original iteration, the representative agent was a central planner with access to the aggregate production technology, resource endowments, and preferences. In subsequent iterations, the model was recast in terms of identical agents, so that the optimum solution for one agent was an optimum for all agents. But that seems to me an a priori exclusion of interaction effects. Because all agents are identical, you can aggregate them without changing the solution; you just multiply the solution for one agent by the number of agents. In order to execute your version of the representative-agent model for the traffic-congestion or the spectator analysis, you would first have to solve for the average behavior of other agents. But isn’t the point of the representative-agent model to avoid having to solve that problem? However, I do agree that working with a continuum of households does introduce a degree of complexity and interaction effects not found in standard representative-agent models. So there may be some hope for progress in that direction.
